Not every fish is worth it

Meta's about-face, and why it's a math choice beyond the politics

You’ve probably heard the buzz: Meta’s doing a big shake-up.

What’s allowed (and not allowed) to be said on its platforms is about to look a whole lot different. And how they’ll check and control what you say? That’s shifting too.

In more formal terms, Meta is overhauling its content moderation policies (only in the US, for now).

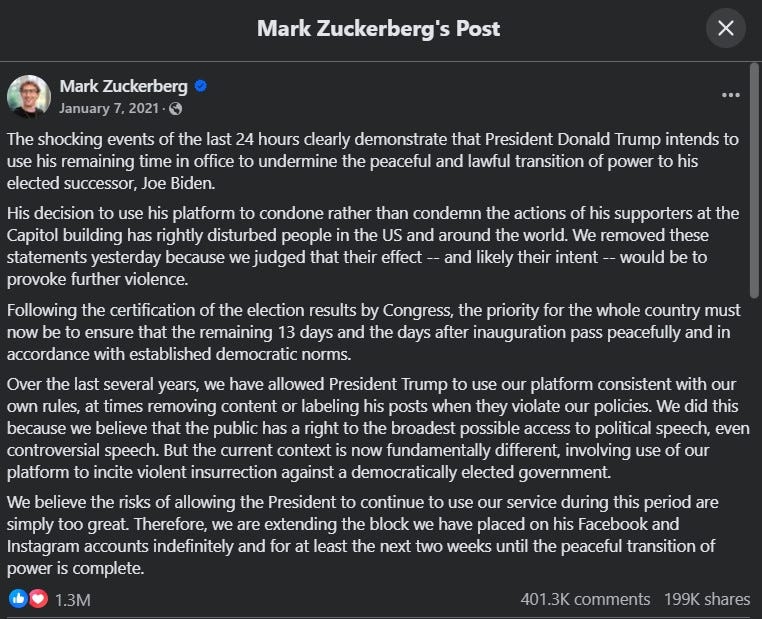

Mark Zuckerberg kept the announcement brief: five minutes, to be exact.

Here’s the breakdown:

Fact-checking? Out. They’re replacing it with something called Community Notes, which sounds a lot like X’s approach. Instead of expert fact-checkers tagging content, they’re putting the responsibility in the hands of the users - free of charge, of course.

Restrictions on harmful speech? Relaxed. Now, you can say that, for instance, a gay person is mentally ill. Call it “freedom of expression,” if you wish.

Moderation overhaul. Meta’s recalibrating its automated systems to focus only on the big, truly harmful violations—like drugs or child exploitation. The rest? They’ll scrutinise less.

Politics are back in play. Meta’s resurrecting political content, which they were trying to bury just a couple of years ago, as we saw here on Artifacts.

Moderators are moving. Goodbye, California. Hello, Texas. Because, well, Texas has different ideas.

In a tagline (provided by Meta), they want “More Speech and Fewer Mistakes.”

The comms strategy around this announcement has been pretty straightforward. In Zuck’s words, Meta wants to “get back to our roots”, which takes us back to (at least) 2019, the year of the speech he referenced.

Not a random year. That was before the Biden administration took the reins, when Trump was president, and when platform accountability laws—like the DSA in the EU—were still in the making. A pivotal time, indeed.

This is 100% an about-face for Meta. Remember, the fact-checking program kicked off in 2016 amid accusations of misinformation flooding its platforms. By 2021, Meta was actively reducing political content, and Zuck wasn’t exactly hiding his disdain for Trump.

But political tides shift, and so do corporate strategies. What we’re seeing is a repositioning: Meta retracing its steps to protect its interests and its business. Predictable? Sure. Surprising? Not so much.

Let’s leave the politics aside, though. I don’t have all the pieces to comment on that, and much has already been said.

What’s more fascinating is what lies beyond politics. Behind the mantra of “more speech and fewer mistakes” are calculations, statistical trade-offs, and artifacts—the tools and decisions shaping it all.

The Story

First, Meta isn’t changing how it handles illegal content. The definition of what’s illegal might be fuzzy and context-dependent, but it’s ultimately grounded in the law. That stays.

Yet illegal content is only a small part of the problem with speech online.

What Zuck seems to be changing his mind about is harmful content, which is inherently a slippery middle ground between legality and illegality.

Harmful content encompasses speech or media that isn’t outright illegal but may have negative consequences. Think of cyberbullying, disinformation, or the glorification of eating disorders. These are, bear with me, also forms of free expression: potentially harmful, but not illegal.

This is where platforms exercise discretion. They decide how safe—or how harmful—they want their spaces to be. By making these choices, they define the boundaries of their communities.

Yet, as Florent Joly brilliantly puts it, framing this as a battle between “censorship” and “free speech” is oversimplifying things. Relaxing harmful content policies isn’t just about “freedom”—it’s about the real-world consequences that come with that freedom. There’s more at stake than just free speech.

Zuck, for his part, seems to care deeply about ensuring no one gets censored. The cost of this? As Zuck puts it, they’re “gonna catch less bad stuff but also reduce the number of innocent people whose content is taken down”.

You see: fewer mistakes, more speech.

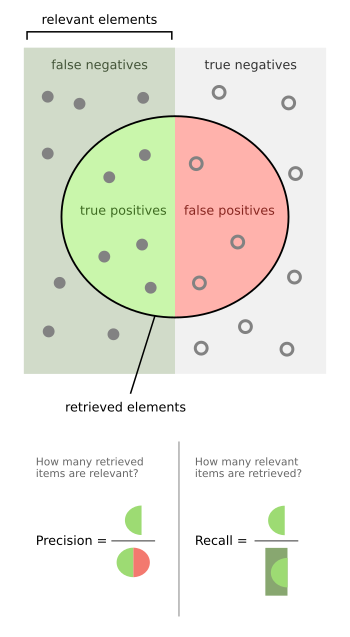

Which brings us to the concept of precision vs. recall—yes, the dreaded terms that sound more like a math exam than content moderation. But stick with me.

Now, before moving forward, it’s worth defining these two seemingly complex terms. Borrowing from Simon Cross:

“Precision” is a measure of the accuracy of a detection system.

“Recall” is a measure of the coverage of a detection system.
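In the standard textbook formulation (a general definition, not a claim about how Meta computes its own metrics), let $TP$ be harmful posts correctly taken down, $FP$ innocent posts taken down by mistake, and $FN$ harmful posts left up:

```latex
\text{precision} = \frac{TP}{TP + FP}
\qquad\qquad
\text{recall} = \frac{TP}{TP + FN}
```

Precision asks “of everything we removed, how much deserved it?”; recall asks “of everything that deserved removal, how much did we catch?”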

To make things simpler, picture a fisherman looking for fish with golden scales.

A precision fisherman casts their line carefully, catching only 20 golden fish without wasting time. They might miss a few, but every catch is golden. A recall fisherman throws a wide net, catching 100 fish to ensure no golden ones escape—but they have to sort through a lot of plain fish too.
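To put numbers on the two fishermen, here is a minimal Python sketch. It assumes, purely for illustration, that the pond holds 30 golden fish in total (a number not in the story above), so the line fisherman's 20 golden fish leave 10 behind, while the net fisherman's 100 fish include all 30 golden ones plus 70 plain ones.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Share of the catch that is actually golden."""
    caught = true_positives + false_positives
    return true_positives / caught if caught else 0.0


def recall(true_positives: int, false_negatives: int) -> float:
    """Share of all golden fish in the pond that ended up in the catch."""
    golden_total = true_positives + false_negatives
    return true_positives / golden_total if golden_total else 0.0


# Hypothetical pond: 30 golden fish in total (an assumption for illustration).
GOLDEN_IN_POND = 30

# Precision fisherman: 20 fish caught, all golden, so 10 golden ones are missed.
line_tp, line_fp = 20, 0
line_fn = GOLDEN_IN_POND - line_tp

# Recall fisherman: 100 fish caught, including all 30 golden ones.
net_tp, net_fp = 30, 70
net_fn = GOLDEN_IN_POND - net_tp

print(f"line: precision={precision(line_tp, line_fp):.2f}, recall={recall(line_tp, line_fn):.2f}")
print(f"net:  precision={precision(net_tp, net_fp):.2f}, recall={recall(net_tp, net_fn):.2f}")
```

Under these assumed numbers, the line fisherman scores a perfect 1.00 on precision but only 0.67 on recall (a third of the golden fish get away), while the net fisherman reaches 1.00 recall with a precision of just 0.30.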

Meta wants to be the precision fisherman. They’d rather remove 20 harmful pieces of content with near certainty than remove 100 pieces, catching more harmful content but also taking down innocent posts by mistake.

Content moderation is always a trade-off between precision and recall. Meta has made a (new) choice: only the most harmful fish are worth catching.
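One common way automated systems move along this trade-off is a confidence threshold: a classifier scores each post, and anything above the threshold gets removed. The sketch below is a generic illustration with made-up scores and labels; the `POSTS` data and the `precision_recall_at` helper are hypothetical and say nothing about how Meta's systems are actually built or tuned.

```python
from typing import List, Tuple

# Hypothetical classifier scores (0 to 1) for ten posts, paired with whether
# each post is actually harmful. Made-up numbers, for illustration only.
POSTS: List[Tuple[float, bool]] = [
    (0.98, True), (0.95, True), (0.90, False), (0.85, True), (0.70, False),
    (0.65, True), (0.55, False), (0.40, True), (0.30, False), (0.10, False),
]


def precision_recall_at(threshold: float, posts: List[Tuple[float, bool]]) -> Tuple[float, float]:
    """Remove every post scoring at or above `threshold`; return (precision, recall)."""
    tp = sum(1 for score, harmful in posts if score >= threshold and harmful)
    fp = sum(1 for score, harmful in posts if score >= threshold and not harmful)
    fn = sum(1 for score, harmful in posts if score < threshold and harmful)
    # Convention: if nothing is removed, nothing was removed by mistake.
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Low threshold = wide net; high threshold = careful line.
for threshold in (0.3, 0.6, 0.93):
    p, r = precision_recall_at(threshold, POSTS)
    print(f"threshold={threshold:.2f}  precision={p:.2f}  recall={r:.2f}")
```

With this toy data, raising the threshold from 0.3 to 0.93 lifts precision from 0.56 to 1.00 while recall falls from 1.00 to 0.40: every removal is now correct, but three of the five harmful posts stay up. That is “catch less bad stuff” and “fewer innocent people taken down” in a single knob.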

(OK, I may be spending too much time at the markets in Paris.)

This is where we are: Meta, a private company, deciding how to handle harmful content based on its own priorities.

What does this mean for us? Simply put, social media is a business. The people in charge will adjust their policies to serve their interests, even if that means relaxing standards for harmful content to maximize engagement.

As frustrating as this might be, we have to accept these decisions for what they are. While we often view online platforms as public spaces, they’re ultimately private, managed by entities whose goals don’t necessarily align with ours.

Despite years of effort to create healthier online spaces (driven by companies and, to some extent, nudged by regulators), social media platforms prioritize engagement and the time we spend on them, often at the cost of letting some harmful discourse slip through.

This isn’t about excusing or condemning these platforms.

It’s about recognizing the realities of how they operate and the shifting incentives behind them. Expecting the same level of safety and moderation as we might in public spaces? That’s wishful thinking.

(All views expressed here are my own and do not reflect any role I hold in any organisation).

Save for Later

All the AI models rolled out in the past 12 months, plus a new AI tool that generates Wikipedia-like pages.

What’s a fact, nowadays? And why we’re not distracted but just focused on something else.

Content moderation: the tough life of humans behind it + a nice tool to play with your own policies.

TikTok was dead, then no longer. And why it has won a different battle.

Are you a founder? Better ask these questions.

A looooot of nice visuals from one of my favourite creators.

🟢 Join the Artifacts Community on WhatsApp to get other Save for Later content!

Someone said this before

“We need to understand what we mean by understand” - someone I bumped into last night.

The Bookshelf

So, this one isn’t necessarily about tech. But “The Art of Uncertainty” by David Spiegelhalter is a huge book on statistics, math, fate, and how we perceive luck. It teaches you how to quantify uncertainty and, eventually, how to cope with it.

📚 All the books I’ve read and recommended in Artifacts are here.

Nerding

Sure, simple enough. But let’s face it: everyone’s buzzing about it. DeepSeek, the latest AI sensation out of China, was built on a shoestring budget with comparatively few GPUs, yet it’s delivering shockingly impressive results.

☕?

If you want to know more about Artifacts, where it all started, or just want to connect...