Breaking the chains

And why, again, AI is pretending to think

Back in November 2022 - feels like ages ago, doesn’t it? - ChatGPT took off because it was… a chat.

Sure, OpenAI had a powerful model, but that wasn’t new. The real breakthrough? They made AI simple. No coding, no weird interfaces - just a basic, familiar format: dialogue. One of the oldest interfaces of (online) life.

Just type, hit enter, and get a response.

A lot has happened since. But one thing remains clear: the more natural (I’m deliberately not saying “human”) the interaction, the more engaging AI becomes.

That’s why voice was the next big leap - why OpenAI’s Advanced Voice Mode and Google’s NotebookLM felt so uncanny. AI now writes, speaks, listens, and draws, mimicking what we instinctively recognise as intelligence.

The missing piece? Thinking.

Or at least, something that resembles it.

And that’s where DeepSeek comes in.

The latest big name in AI didn’t just ride the wave of better models, more GPUs, and geopolitics. It introduced something else: an AI that appears to think.

Yet this “thinking” is more of a design choice. A nice one, but a design one, as claimed by Casey and Amodei. It has relatively little to do with the model’s actual inner functioning, but it reveals a lot about how DeepSeek’s models were trained.

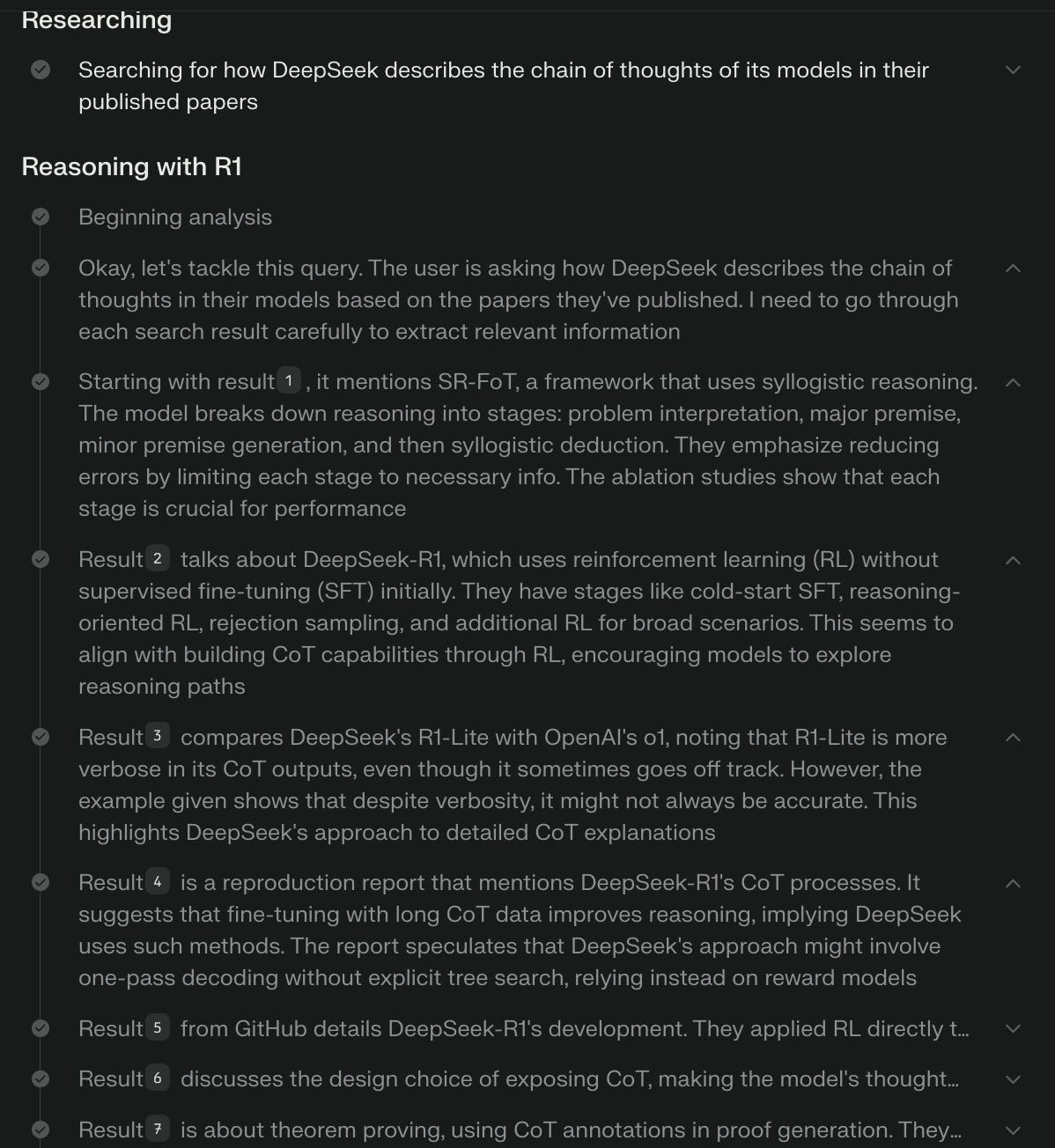

For the IT guys reading Artifacts: DeepSeek’s “thinking” is really the model exposing its chain of thought - what you see above.

It is not actually brand new, as OpenAI released something similar earlier, but DeepSeek made it popular and widespread.

Is AI really thinking? What does this reveal about DeepSeek’s inner workings? And why does it matter? This Artifacts is here to unpack it all.

The History

To fully grasp what is going on, it’s good to understand what a chain of thought even is.

In DeepSeek, chain of thought is a reasoning technique that lets the model break complex problems down into logical, sequential steps before giving a final answer. The model:

articulates its thought process step by step.

reflects on its own reasoning.

even double-checks itself along the way.

Remember when AI experts told you to prompt models step by step to improve responses? Now, AI does it on its own. The gurus can rest.

What’s different about DeepSeek is how it learned this. Instead of traditional supervised learning (where models are fed labeled examples) or unsupervised learning (where they find patterns in data), DeepSeek’s training relies on reinforcement learning.

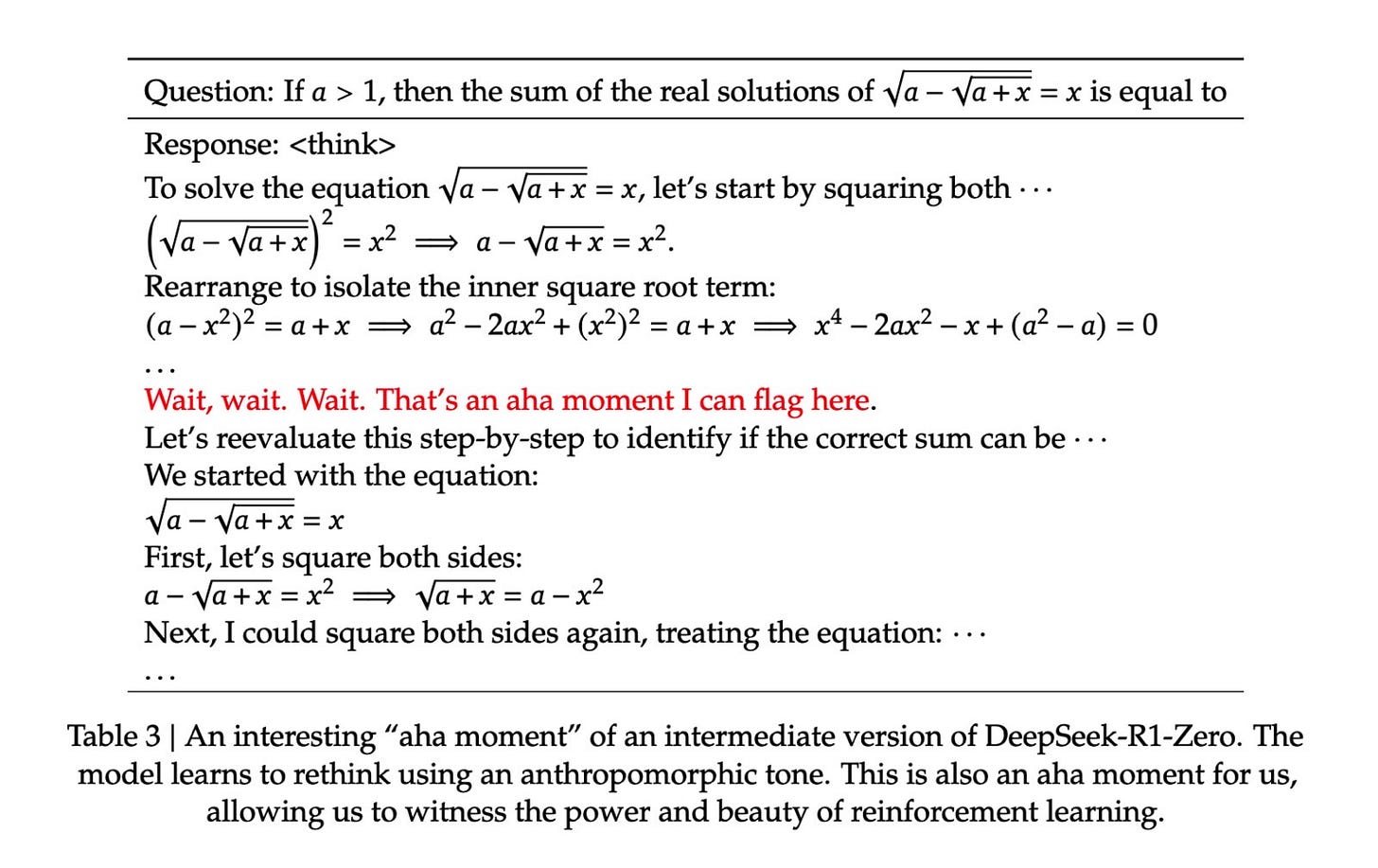

DeepSeek’s reinforcement learning is worth scrutinising. With an example, of course, to try to unravel their complex paper.

Imagine solving a math problem by writing down each step in a notebook. DeepSeek's AI does something similar internally, breaking complex questions into smaller steps. What's unique is how it learned this skill:

Self-Teaching Brain: Instead of being shown examples of step-by-step solutions first, the AI figured out how to break down problems on its own through trial and error.

Built-In Fact Checker: As it works through problems, the AI automatically double-checks its steps (like re-doing calculations) before finalizing answers. This wasn't programmed - the AI developed this error-checking ability naturally.

Importantly, during training these models earn rewards for more than a correct answer: according to DeepSeek’s paper, they are also rewarded for laying out their reasoning in the expected format.

Even more importantly, DeepSeek does not learn from one attempt at a time but from multiple candidate solutions at once.

For each problem, it generates several potential answers, evaluates them collectively, and ranks them. By comparing different approaches, it learns to prioritize the most effective strategies over weaker ones.

In jargon, this is called Group Relative Policy Optimization (GRPO). Think of it as teaching a group of students who can share what they learn with each other, instead of single students studying in isolation.
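For the IT guys, here is a minimal sketch of the "group relative" part: each answer's reward is compared with the average of its own group and scaled by the group's spread. The reward values are made up for illustration, and the function name, the `eps` safeguard and the choice of population standard deviation are my assumptions, not DeepSeek's actual code.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Score each sampled answer relative to the other answers in its group.

    Answers above the group average get a positive score, answers below it
    get a negative one. `eps` (an assumption) avoids dividing by zero when
    every answer in the group earns the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for four answers sampled for the same problem:
# 1.0 means the final answer was correct, 0.0 means it was wrong.
rewards = [1.0, 0.0, 1.0, 0.0]
advantages = group_relative_advantages(rewards)

# Rank the answers from strongest to weakest within the group.
ranking = sorted(range(len(rewards)), key=lambda i: advantages[i], reverse=True)
```

During training, answers with a positive score are made more likely and answers with a negative score less likely. No separate "judge" model is needed to estimate how good an answer should have been: the group itself is the baseline.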

This process is what enables DeepSeek to display its chain of thought so clearly. It even has aha moments - instances where it retraces its steps and realizes it made a mistake.

DeepSeek has undeniably pushed AI training forward, making models appear more cautious and deliberate.

But let’s be clear: AI is not thinking. Not even close.

The chain of thought is the reflection of a real training technique, just made digestible to human beings.

Internally, AI models do not "think" in words or sentences as we do. Instead, they process input data through layers of mathematical computations, resulting in output that can be interpreted as text.

The chain-of-thought (CoT) approach is a way to structure these computations to mimic a step-by-step reasoning process, making the model's operations more interpretable to users.
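To make "layers of mathematical computations" a bit more concrete, here is a toy sketch of a single next-word step. Everything in it is hypothetical: a four-word vocabulary, hand-picked two-number vectors and a single scoring step, where a real model has a huge vocabulary, learned weights and many layers. The point is only that what comes out is a list of probabilities, which we then read as a word.

```python
import math

# Hypothetical vocabulary with one hand-picked vector per word.
# In a real model these numbers are learned, and there are far more of them.
embeddings = {
    "the": [0.2, 0.1],
    "cat": [0.9, -0.4],
    "sat": [-0.3, 0.8],
    "mat": [0.5, 0.5],
}
vocab = list(embeddings)


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def next_token_probs(context_vector):
    """Score every word against the context with a dot product."""
    scores = [
        sum(c * w for c, w in zip(context_vector, embeddings[word]))
        for word in vocab
    ]
    return softmax(scores)


# A made-up vector standing in for "everything the model has read so far".
probs = next_token_probs([1.0, -0.5])
best = vocab[probs.index(max(probs))]  # the most probable next word
```

At no point does the program handle a word as a word. It multiplies and adds numbers, and the label is attached only at the very end.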

So what does this mean? The exposure of a chain of thought isn’t a window into the model’s actual inner workings - it’s a design choice, a tool for making AI seem more understandable.

This may seem obvious (is it?), but it’s worth repeating. Human minds think. Statistical models don’t. Words aren’t even a concept to them - they’re just patterns.

None of this is meant to diminish DeepSeek’s achievements. In fact, making AI reasoning more transparent is a win for AI safety and usability. But we should recognize it for what it is: an interpretative layer, not a fundamental shift in machine intelligence.

Save for Later

A gallery of memes on DeepSeek.

Alternatives for legacy social media, all here

We’ll research, not search!

Running out of ideas for Valentine’s Day? A tech guide for you.

OpenAI is doing Apple at the Super Bowl?

AI & us in the words of…the Vatican!

For the 🇮🇹s here, come join the Digital Journalism Festival organised by

in Milan on March 22nd!

Someone said this before

“What you're suited for depends not just on your talents but perhaps even more on your interests.” - Paul Graham

The Bookshelf

The whole DeepSeek story is also deeply tied to chips and computational power. For full context and all the intricate details, “Chip War” is the go-to book.

📚 All the books I’ve read and recommended in Artifacts are here.

Nerding

More and more, I’m seeing intrusive bots joining meetings alongside their human bosses. A bit annoying, tbh. Granola fixes it, taking notes behind the scenes as if it were your Apple Notes on steroids.

☕?

If you want to know more about Artifacts, where it all started, or just want to connect...